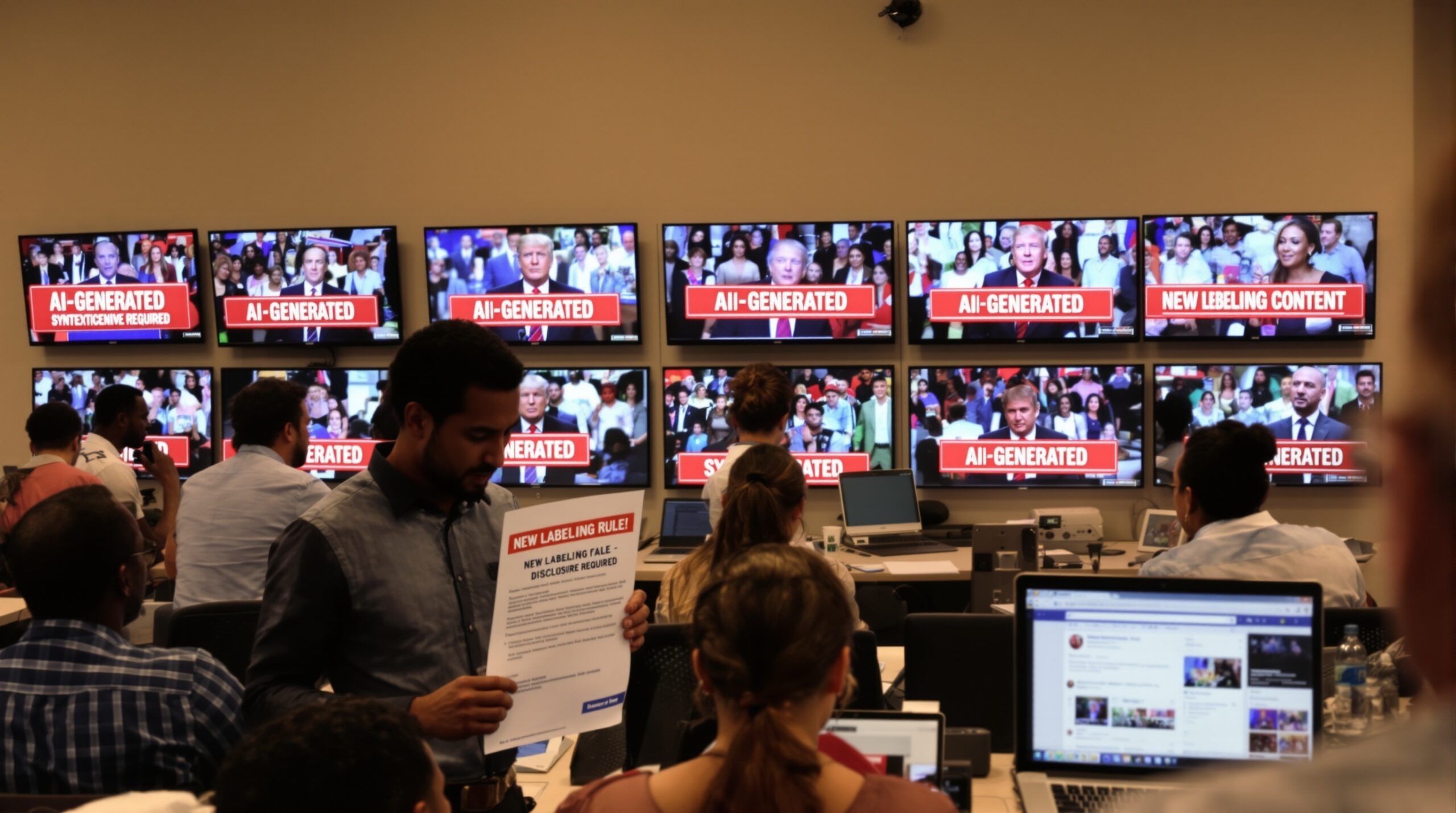

Regulators are moving to require labels on AI-generated political ads across television and social platforms. These efforts target synthetic content that could mislead voters during tightly contested campaigns. The push reflects rising concern over manipulated audio, video, and images. Campaigns, consultants, and platforms face new disclosure obligations under these proposals. The rules promise more transparency as election timelines accelerate.

Why Regulators Are Acting Now

Generative tools make political deepfakes cheap, fast, and convincing. Misleading content can spread widely before campaigns or fact-checkers respond. Regulators view labeling as a pragmatic transparency safeguard for voters. Labels do not ban speech but inform audiences about synthetic elements. This distinction guides many regulatory proposals currently under consideration.

Election administrators worry about last-minute deceptive videos. These could mimic candidates and fabricate positions or crisis responses. False narratives can suppress turnout or inflame tensions. Labels aim to flag altered content at the point of exposure. This goal shapes disclosure standards across media channels.

What Counts as AI-Generated or Synthetic

Policies generally target materially altered or AI-synthesized content. Examples include voice cloning, face swaps, and generated images. The trigger is whether viewers could be misled about what actually happened. Routine edits such as color correction and cropping typically fall outside these definitions. Regulators stress plain-language definitions to support compliance and enforcement.

Standards often cover both full fabrication and partial manipulation. A real video with an altered soundtrack could still qualify. The same applies to composites mixing genuine and synthetic footage. Consistency matters because campaigns use varied production workflows. Clear definitions reduce disputes over borderline cases.

Scope Across TV, Radio, and Digital Platforms

Broadcast rules focus on on-air disclaimers and public file records. Cable and satellite distributors face similar obligations in many proposals. Digital platforms emphasize on-screen labels and metadata. Social platforms pair labels with policy enforcement and detection tools. These efforts aim to reach voters wherever ads appear.

Campaigns often run integrated programs across media. A disclosure standard that spans channels reduces confusion. Cross-platform alignment can also lower compliance costs. That alignment helps media buyers plan consistent creative packages. Layered disclosures remain possible where statutes require stricter treatment.

Emerging Federal Actions and Rulemaking

Federal regulators have signaled growing interest in AI ad transparency. They are exploring requirements for clear, conspicuous disclosures in political communications. These proposals often cover candidate and issue advertising. Some rulemakings also address sponsorship identification and recordkeeping updates. Agencies coordinate with election officials and consumer protection authorities.

Public comment processes inform the final rules. Stakeholders submit data on voter understanding and deception risks. Technology providers describe watermarking and provenance solutions. Broadcasters and platforms assess operational burdens and timelines. This feedback shapes disclosure wording and technical feasibility.

State-Level Laws and Experiments

Several states have enacted synthetic media rules for elections. Many laws require labels on AI-generated political communications. Some laws restrict deceptive deepfakes near voting periods. Legislatures seek to balance transparency with First Amendment protections. Effective state approaches often guide broader policy adoption.

Definitions and remedies vary across states. Some rely on private rights of action and takedown mechanisms. Others pursue criminal penalties or civil fines. Safe harbors for satire and parody appear in several statutes. These differences complicate multistate campaign planning and compliance.

Platform Policies and Industry Standards

Major platforms have introduced disclosure requirements for election ads. Policies often mandate labels for synthetic or materially altered content. Enforcement combines advertiser attestations and automated detection tools. Platforms also experiment with provenance signals in media files. These efforts seek to bolster trust without blocking speech.

Search and video ad networks require explicit disclaimers for altered election content. Platforms may also restrict deceptive deepfakes that mislead voters. Some networks maintain political ad archives for accountability. Transparency centers help researchers review creative and targeting. These practices complement regulator-led approaches.

How Disclosures Would Appear to Voters

Proposals emphasize clear, plain-language labels on screen. Labels should be readable without pausing or rewinding. Audio disclosures should be spoken at a normal cadence. Duration requirements help viewers notice the information. Placement rules prevent burying labels in corners or end cards.

Standard phrasing might read “This ad contains AI-generated content.” Some proposals require specifying the altered elements. Additional detail helps viewers interpret what they see or hear. Mobile layouts require careful size and contrast choices. Accessibility rules support screen readers and captions.
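The contrast requirement can be checked mechanically. As a rough sketch, the code below computes the contrast ratio between label text and its background using the relative-luminance formula from the W3C's WCAG 2.x guidelines. Applying that formula, and the 4.5:1 minimum that WCAG recommends for normal-size text, to political ad labels is an assumption made for illustration; the proposals described here do not specify a threshold. All function names are hypothetical.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple) -> float:
    """Relative luminance of an (R, G, B) color with 0-255 channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple, bg: tuple) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def label_is_legible(fg: tuple, bg: tuple, minimum: float = 4.5) -> bool:
    """Assumed check: does the label meet a minimum contrast ratio?"""
    return contrast_ratio(fg, bg) >= minimum
```

A creative team could run a check like this on each label and background pairing before trafficking an ad, alongside manual review of size and placement.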

Compliance Workflows for Campaigns and Vendors

Campaigns will need disclosure checklists for creative development. Producers should tag assets that include synthetic elements. Media teams must ensure labels match each placement. Legal teams should document decisions and keep version histories. These steps reduce last-minute changes and compliance errors.

Vendors can add disclosure fields to insertion orders. Asset management systems can track provenance and edits. Watermarking and content credentials support audit trails. Post-campaign reviews should assess disclosure accuracy and performance. Lessons learned can refine future production and training.
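The tagging and insertion-order steps above can be sketched as a simple pre-flight check. This is a minimal illustration, not a description of any vendor's system: the `Asset` and `Placement` records, their field names, and the `missing_disclosures` helper are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Asset:
    """A creative asset; field names are illustrative assumptions."""
    asset_id: str
    # Free-text tags added by producers, e.g. ["voice clone", "generated image"].
    synthetic_elements: list[str] = field(default_factory=list)

    @property
    def needs_disclosure(self) -> bool:
        # Any tagged synthetic element triggers a disclosure in this sketch.
        return bool(self.synthetic_elements)


@dataclass
class Placement:
    """One line of a hypothetical insertion order."""
    asset: Asset
    channel: str  # e.g. "broadcast" or "social"
    disclosure_text: str = ""


def missing_disclosures(placements: list[Placement]) -> list[Placement]:
    """Return placements whose asset is tagged synthetic but has no label text."""
    return [
        p for p in placements
        if p.asset.needs_disclosure and not p.disclosure_text.strip()
    ]
```

Running a check like this before each flight gives legal and media teams a short list of placements to fix, rather than relying on a manual review of every creative version.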

Detection, Watermarking, and Content Credentials

Labeling rules encourage investment in authenticity technologies. Watermarking embeds signals into generated media files. Content credentials attach tamper-evident metadata to assets. Provenance standards help platforms verify origin and edits. These tools reduce disputes over whether content is synthetic.
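To make "tamper-evident metadata" concrete, here is a simplified sketch using only Python's standard library. It records a hash of the media bytes and an edit history, then signs that record so later changes to either the file or the metadata can be detected. Production content credentials, such as those defined by the C2PA specification, rely on public-key signatures and richer manifests; the shared-secret HMAC, the manifest layout, and the function names below are assumptions chosen for brevity.

```python
import hashlib
import hmac
import json


def make_credential(media: bytes, edits: list, key: bytes) -> dict:
    """Build a signed record of the media hash and its declared edits."""
    manifest = {
        "sha256": hashlib.sha256(media).hexdigest(),
        "edits": edits,  # e.g. ["ai_generated_voiceover"]
    }
    # Serialize with sorted keys so the signed bytes are reproducible.
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_credential(media: bytes, credential: dict, key: bytes) -> bool:
    """Check that neither the media bytes nor the manifest has changed."""
    manifest = credential["manifest"]
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, credential["signature"])
    media_ok = hashlib.sha256(media).hexdigest() == manifest["sha256"]
    return signature_ok and media_ok
```

Note that a record like this only shows whether a file matches what was declared. It does not detect synthetic content on its own, which is why it complements rather than replaces watermarking and detection.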

No single method reliably detects every manipulation. Adversaries can compress, crop, or remix files to evade detection. Layered approaches improve reliability in production environments. Disclosure policies work best with robust provenance pipelines. Industry collaboration accelerates adoption and interoperability.

Enforcement and Penalties

Regulators plan penalties for noncompliant ads and sponsors. Sanctions can include fines, takedowns, and file corrections. Repeat violations may trigger stricter scrutiny and audits. Platforms can suspend advertisers that evade disclosure rules. Enforcement aims to deter deceptive campaign practices.

Adversarial scenarios complicate enforcement timing. Harmful deepfakes often spread quickly at critical moments. Rapid response protocols help contain damage during crises. Coordination with platforms accelerates takedowns and corrections. Public advisories educate voters about ongoing manipulation tactics.

Legal Considerations and Constitutional Limits

Disclosure rules must meet constitutional tests for compelled speech. Courts examine whether requirements are clear and narrowly tailored. The government interest in preventing deception is well recognized. Vague or burdensome mandates face higher legal risks. Agencies draft language carefully to survive judicial review.

Exemptions for satire and artistic expression are common. These help protect political commentary and humor. Regulators distinguish deception from protected expression. Bright-line definitions support consistent, fair enforcement. Clarity reduces chilling effects on legitimate political speech.

Implications for Voter Trust and Campaign Strategy

Labels give voters more context about persuasive messages. Transparency can reduce confusion during information spikes. Campaigns may recalibrate creative strategies toward authentic footage. Authenticity appeals often boost credibility with skeptical audiences. Disclosure compliance can become a competitive advantage.

Consultants will test label wording and placement for effectiveness. They will study whether labels change how voters interpret an ad. Measurement can guide future creative optimization and formats. Clear disclosures may reduce backlash to experimental content. Responsible innovation remains possible under labeling rules.

What Campaigns Should Do Now

Teams should inventory creative pipelines and tools. They should identify where synthetic content may enter production. Training can help staff recognize disclosure triggers. Contracts should obligate vendors to follow labeling rules. These steps prepare campaigns for new requirements.

Campaigns should also coordinate with platform policy teams. Early dialogue clarifies placement-specific label formats. Pilot projects can validate watermarking and credentials workflows. Crisis plans should include deepfake response protocols. Prepared organizations adapt more smoothly when rules take effect.

Looking Ahead

Labeling policy will continue evolving with technology and elections. Regulators will refine definitions based on case experience. Platforms will update enforcement and transparency tools. Campaigns will adjust creative practices to meet voter expectations. The shared objective remains a more informed electorate.

Disclosure requirements do not eliminate manipulation risks. They do provide warnings at the moment of persuasion. Combined with media literacy, labels strengthen democratic resilience. Coordinated action across sectors increases impact and consistency. Continued vigilance will shape trustworthy political communication online and offline.

Bottom Line

Regulators are moving to require labels on AI-generated political ads across channels. Campaigns must disclose synthetic elements clearly and promptly. Platforms and broadcasters will enforce standardized, conspicuous warnings. These steps aim to protect voters without silencing political debate. Transparency is becoming a core expectation in modern campaigning.